The Australian Parliament House Standing Committee on Employment, Education and Training inquiry into the use of generative artificial intelligence in the Australian education system has been running since May 2023. As part of the inquiry, I made a submission that highlighted one aspect of generative AI that is now being identified as a real issue for young people, their families, and schools: the use of AI applications by school children (so far, boys by public accounts) to generate deepfake pornographic content of their female peers. Easy-to-use apps that can swap faces and strip clothes off an image are out there, and of course it was only a matter of time before children and young people found them. In my submission to the inquiry (dated July 18 2023) I wrote:

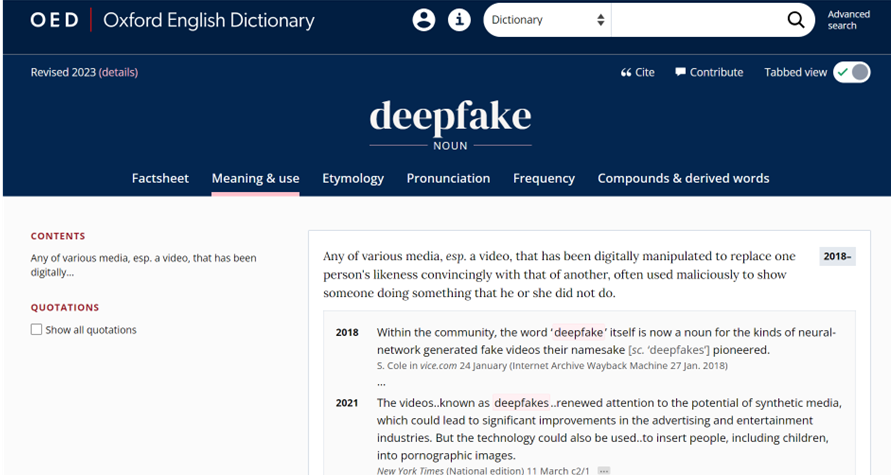

There are software applications that can be used to easily manipulate media to generate alternative and fake text, audio, images, and video. AI has and will be deployed in free or cheap applications that can create and distribute deepfakes with relative ease. This is already occurring with deep fake pornography that is used to victimise and ruin the lives of mainly women and girls. Deep fake audio is also being used for malicious and criminal enterprise. Deepfake apps will pose significant challenges to schools and other educational institutions as they are weaponised for bullying, harassment, and deception. The rapid human and bot spread of deep fakes will probably surpass the damage already occurring with student online bullying and will adversely affect staff who are targeted and the ethical culture of the educational institution. The anonymity through which deep fakes can be created will exacerbate the issue.

On September 24 2023 an article appeared on the BBC news website which reported:

A sleepy town in southern Spain is in shock after it emerged that AI-generated naked images of young local girls had been circulating on social media without their knowledge. The pictures were created using photos of the targeted girls fully clothed, many of them taken from their own social media accounts. These were then processed by an application that generates an imagined image of the person without clothes on. So far more than 20 girls, aged between 11 and 17, have come forward as victims of the app’s use … (A)t least 11 local boys have been identified as having involvement in either the creation of the images or their circulation via the WhatsApp and Telegram apps. Investigators are also looking into the claim that an attempt was made to extort one of the girls by using a fake image of her.

On October 5 2023, The Australian newspaper published an article, “Rules to control the use of AI in schools”, which quoted the office of the Australian eSafety Commission and the Australian Human Rights Commission:

(T)he eSafety Commissioner warned of threats to teachers and students from deep-fake porn generated by AI. The commission’s executive manager, Paul Clark, told a federal parliamentary inquiry into the use of AI in education on Wednesday that it could be misused to “target” teachers. “We have now received our first complaint around AI-generated sexually explicit content produced by students to bully other students,’’ he said. “This is an open matter and we can’t discuss the detail and investigation. The issue of AI really accelerates the ability to manipulate voices and images, which we see as a potential risk to increase cyber bullying against students, but also the targeting of teachers.’’ Australian Human Rights Commissioner Lorraine Finlay warned the inquiry that safeguards must be built into AI technologies used by children.

On November 4 2023 CNN reported on a U.S. case of deepfake pornography under the headline “High schooler calls for AI regulations after manipulated pornographic images of her and others shared online”.

It isn’t a surprise that the deepfake pornography phenomenon has made its way into the lives of young people and schools. A recent article in Wired begins:

GOOGLE’S AND MICROSOFT’S search engines have a problem with deepfake porn videos. Since deepfakes emerged half a decade ago, the technology has consistently been used to abuse and harass women—using machine learning to morph someone’s head into pornography without their permission. Now the number of nonconsensual deepfake porn videos is growing at an exponential rate, fueled by the advancement of AI technologies and an expanding deepfake ecosystem.

The article reports on an analysis by a researcher, who wanted to remain anonymous for fear of harassment, showing that over the first nine months of 2023, 113,000 videos were uploaded to 35 deepfake porn video websites, a 54% increase on the whole of the previous year. The analysis forecast that by the end of 2023 more deepfake videos would have been produced than in every other year combined.

This is of course not a new issue. There have been regulatory and legislative responses. Here is an article on Australia’s legislative response to deepfake pornography, which discusses recourse to the Online Safety Act 2021. The Act provides civil penalties for the non-consensual sharing of intimate images, including images which have been altered. The Australian eSafety Commission provides recommendations to curb the harms of generative AI. The eSafety Commission states that in August 2023 it received its “first reports of sexually explicit content generated by students using this technology to bully other students. That’s after reports of AI-generated child sexual abuse material and a small but growing number of distressing and increasingly realistic deepfake porn reports.” It goes on to say:

Let’s learn from the era of ‘moving fast and breaking things’ and shift to a culture where safety is not sacrificed in favour of unfettered innovation or speed to market. If these risks are not assessed and effective and robust guardrails integrated upfront, the rapid proliferation of harm escalates once released into the wild. Solely relying on post-facto regulation could result in a huge game of whack-a-troll… The tech trends position statement also sets out eSafety’s current tools and approaches to generative AI, which includes education for young people, parents, and educators; reporting schemes for serious online abuse; transparency tools; and the status of mandatory codes and standards.

There have certainly been voluntary agreements between big technology companies and the U.S. government about watermarking deepfake content. However, there are other companies producing uses for deepfakes that won’t comply with this, and really, what use is a watermark if you have been pornified? It is still violence. Teaching people how to spot or identify deepfakes is a good idea too, but it isn’t prevention of violence. In addition, AI-powered bots can spread these deepfake images and videos at rapid speed, so watermarking and identification strategies are only partial solutions.

And pornographic content creation is really only the tip of this terrible iceberg. Imagine the other types of deepfakes that might be generated to discredit or vilify teachers and students.

There is of course a major pedagogical prevention project to be undertaken. The Australian Curriculum’s Digital Literacy and Ethical Understanding Capabilities have a major role in developing the types of knowledge, attitudes and behaviours that can prevent young people (mainly boys) from doing violence by producing and sharing deepfake porn, both generally and of their peers and teachers. There is also a place for understanding deepfakes in respect and consent education programs, which are currently not well integrated into Australian schooling. Parental and caregiver education within school communities is vital too.

School policy-makers and leaders need to prepare, right now, their responses to the production and sharing of deepfake porn and other content that does violence to people in their school communities. There is no time to wait.